The Future of Digital Media Synthesis and Its Implications

"INSUFFICIENT DATA FOR MEANINGFUL ANSWER"

-Multivac, The Last Question by Isaac Asimov (1956)

Twenty centuries ago, alone in the vastness of the Aegean Sea, a man named John was isolated on the island of Patmos, not far from the coast of Asia Minor. Very little is known about who John was or why he was on the island. During this isolation, John had a spiritual, pseudo-psychedelic experience that left him with an exalted vision of civilization's distant future. His account, recorded in the Book of Revelation, is the last book of the Christian Bible.

The cascading shoreline of Patmos

What's interesting to me is that skeptics tend to assign the label "Antichrist" to individuals in power, which I think is naive. A quick search for "Antichrist" on Urban Dictionary reveals a list of presidents whom people believe to be Satan reincarnate.

Urban Dictionary presents many conflicting views on who the Antichrist might be

I think it is a dangerous assumption that the "Beast" Revelation warns us about will take the form of a man, and below I will explain why I think the truth is different.

What does game theory have in common with neuroscience, computer programming and statistics? For Ian Goodfellow, director of machine learning at Apple, these sciences are the foundation of what could be the next generation of artificial intelligence.

The words 'Artificial Intelligence' have a peculiar ability to provoke an unpredictably diverse spectrum of emotions, even from the most technologically uninvested people. Many AI fanatics live with a lingering dread of a Terminator scenario, where sentient robots perceive humans as an existential threat that must be eliminated to ensure their own long-term survival. On the other side of the fence, optimists look toward a fever-dream utopian new world order, where perfect, unblemished AI servants make our food, entertain us, and suck our dicks.

The tricky part of AI speculation only becomes clear once the major underlying problem is described. Here's a fact: when humans write code, it can generally be improved. The holy grail of AI is software that can improve its own cognitive abilities without human supervision or intervention. A machine that can learn by itself, improve its own learning abilities, and then learn more could exponentially exceed any preexisting intelligence. If a machine starts to do that, even the smartest AI scientists have no idea what will happen.

Here's a thought experiment to illustrate what something far smarter than a human might be like. Imagine you were asked a question, then given an entire uninterrupted year to research, refine, and improve your answer before responding. A self-improving AI could perform that year of human thinking in a fraction of a second.

Because it's impossible to predict the future of sentient, self-improving artificial intelligence, that's not the purpose of this blog post. Instead, I want to prophesy, if you will, the near future of mainstream AI and the implications it will have on our media consumption habits.

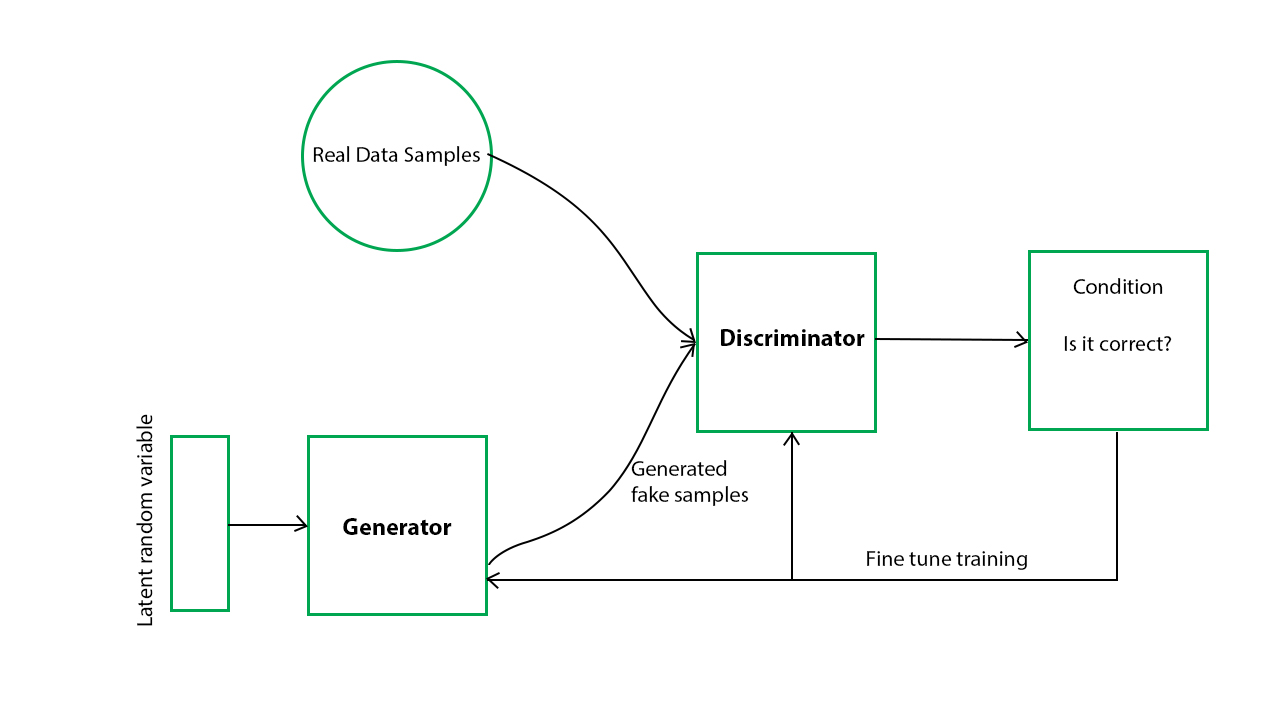

Ian Goodfellow made waves in the AI community when he introduced Generative Adversarial Networks (GANs), a class of neural network models that can generate new data from examples. For example, if you trained a GAN on a thousand pictures of cats, it could draw an original picture of a cat unlike any of the training examples. In short, a GAN is composed of two parts: the "Generator" and the "Discriminator". The Discriminator tries its best to discern whether a picture is a real cat or a "fake" cat, while the Generator tries its best to fool the Discriminator. The two are pitted against one another until they reach an equilibrium where the Discriminator can't tell the difference between an actual cat and a generated cat.

How do GANs work? Source: GeeksForGeeks
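The equilibrium described above has a precise form in Goodfellow et al.'s original 2014 GAN paper: for a fixed generator, the best possible discriminator scores each outcome x as D*(x) = p_data(x) / (p_data(x) + p_g(x)), and once the generator matches the data, D* is 1/2 everywhere and the game's value bottoms out at -log 4. The sketch below checks this on a toy discrete distribution. The four "kinds of cat picture" and their probabilities are made up for illustration, and a real GAN learns D and G as neural networks rather than computing D* in closed form.

```python
import math

def optimal_discriminator(p_data, p_gen):
    """Best discriminator for a fixed generator: D*(x) = p_data(x) / (p_data(x) + p_gen(x))."""
    return [pd / (pd + pg) if pd + pg > 0 else 0.5 for pd, pg in zip(p_data, p_gen)]

def gan_value(p_data, p_gen, d):
    """V(D, G) = E_data[log D(x)] + E_gen[log(1 - D(x))] over discrete outcomes."""
    total = 0.0
    for pd, pg, dx in zip(p_data, p_gen, d):
        if pd > 0:
            total += pd * math.log(dx)
        if pg > 0:
            total += pg * math.log(1.0 - dx)
    return total

def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a, b):
        return sum(x * math.log(x / y) for x, y in zip(a, b) if x > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

# Hypothetical toy distribution over four kinds of "cat picture".
real = [0.1, 0.2, 0.3, 0.4]
early_fake = [0.4, 0.3, 0.2, 0.1]  # a generator early in training
late_fake = list(real)             # a generator that has matched the data

for name, fake in [("early", early_fake), ("late", late_fake)]:
    d = optimal_discriminator(real, fake)
    v = gan_value(real, fake, d)
    print(name, [round(x, 3) for x in d], round(v, 4))
```

For the matched generator, every D* value is 0.5, so the discriminator can only guess, and V equals -log 4. For the mismatched generator, V sits above -log 4 by exactly twice the Jensen-Shannon divergence between the two distributions, which is the gap the generator is effectively trying to close.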

And the results can be really damn good. This image was generated by NVIDIA's StyleGAN, which gives users the ability to define the characteristics of the output, to a certain extent.

Images generated by NVIDIA's styleGAN
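How StyleGAN lets users steer its output can be sketched in a few lines. In the StyleGAN paper (Karras et al., 2019), a mapping network turns a random latent code into a style vector, and learned affine transforms turn that style into a per-channel scale and bias applied at each generator layer through adaptive instance normalization (AdaIN). The sketch below shows only the AdaIN step in NumPy; the mapping network and affine transforms are omitted, and the feature map and style values are made-up placeholders.

```python
import numpy as np

def adain(features, style_scale, style_bias, eps=1e-8):
    """Adaptive instance normalization for one (channels, height, width) feature map.

    Each channel is normalized to zero mean and unit std, then rescaled and
    shifted by per-channel values that would come from the style (given directly here).
    """
    mu = features.mean(axis=(1, 2), keepdims=True)
    sigma = features.std(axis=(1, 2), keepdims=True)
    normalized = (features - mu) / (sigma + eps)
    return style_scale[:, None, None] * normalized + style_bias[:, None, None]

rng = np.random.default_rng(0)
features = rng.standard_normal((3, 4, 4))  # toy 3-channel, 4x4 feature map
scale = np.array([1.0, 2.0, 0.5])          # hypothetical style values
bias = np.array([0.0, -1.0, 3.0])
out = adain(features, scale, bias)
print(out.mean(axis=(1, 2)).round(3), out.std(axis=(1, 2)).round(3))
```

Whatever statistics the incoming features had, each output channel ends up with the mean and spread dictated by the style. That is why swapping in a different style at coarse or fine layers changes broad or fine attributes of the generated image while the rest of the network stays the same.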

It didn't take long for inspired researchers to find applications of GANs outside of image synthesis, and neural networks can now synthesize all sorts of media. dadabots, an online collection of neural music synthesis projects, has a myriad of examples of computer-synthesized audio. I probably wouldn't listen to it on the daily, but it foreshadows what may be possible in the future. Check out Deep The Beatles!, a collection of computer-generated songs that emulate the sound of the classic rock band. DVD-GAN, a project by Google's DeepMind lab, can generate convincing video clips.

Source: Medium

Based on the accelerating rate of advancement in media synthesis, a subset of artificial intelligence, the once-unpredictable future of media technology has slowly come into focus. Increasingly sophisticated unsupervised media synthesis will replace movies, music, literature, and digital entertainment made by humans in the short-term future. Let us discuss the implications of fully automated media synthesis as a short-term reality we will inevitably face.

In 2019, San Francisco-based OpenAI published a paper that simultaneously shocked and impressed machine learning enthusiasts. GPT-2, a language model trained on 40GB of internet text, was initially withheld from the public on the grounds that it was "too dangerous to release". It was simply trained to predict the next word in a sequence of text. Below is an example of what this model is capable of. What's especially interesting is how consistent particular details remain throughout the sample.

System Prompt (human-written)

In a shocking finding, scientist discovered a herd of unicorns living in a remote, previously unexplored valley, in the Andes Mountains. Even more surprising to the researchers was the fact that the unicorns spoke perfect English.

Model Completion (machine-written, 10 tries)

The scientist named the population, after their distinctive horn, Ovid’s Unicorn. These four-horned, silver-white unicorns were previously unknown to science.

Now, after almost two centuries, the mystery of what sparked this odd phenomenon is finally solved.

Dr. Jorge Pérez, an evolutionary biologist from the University of La Paz, and several companions, were exploring the Andes Mountains when they found a small valley, with no other animals or humans. Pérez noticed that the valley had what appeared to be a natural fountain, surrounded by two peaks of rock and silver snow.

Pérez and the others then ventured further into the valley. “By the time we reached the top of one peak, the water looked blue, with some crystals on top,” said Pérez.

Pérez and his friends were astonished to see the unicorn herd. These creatures could be seen from the air without having to move too much to see them – they were so close they could touch their horns.

While examining these bizarre creatures the scientists discovered that the creatures also spoke some fairly regular English. Pérez stated, “We can see, for example, that they have a common ‘language,’ something like a dialect or dialectic.”

Dr. Pérez believes that the unicorns may have originated in Argentina, where the animals were believed to be descendants of a lost race of people who lived there before the arrival of humans in those parts of South America.

While their origins are still unclear, some believe that perhaps the creatures were created when a human and a unicorn met each other in a time before human civilization. According to Pérez, “In South America, such incidents seem to be quite common.”

However, Pérez also pointed out that it is likely that the only way of knowing for sure if unicorns are indeed the descendants of a lost alien race is through DNA. “But they seem to be able to communicate in English quite well, which I believe is a sign of evolution, or at least a change in social organization,” said the scientist.

(The model has since been released to the public, so you can try it out yourself.)
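GPT-2's training objective, predicting the next word from the words before it, can be illustrated with something far smaller. The sketch below swaps GPT-2's large Transformer over subword tokens for a simple word-level bigram counter, a deliberately crude stand-in rather than how GPT-2 works internally; the function names and the toy corpus are hypothetical. The generation loop has the same shape either way: predict a next word, append it, repeat.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, how often each other word follows it."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, n_words, rng=None):
    """Extend `start` one predicted word at a time.

    With rng=None this is greedy decoding (always the most frequent follower,
    ties going to the follower seen first); with a random.Random it samples
    followers in proportion to their counts.
    """
    out = [start]
    for _ in range(n_words):
        followers = counts.get(out[-1])
        if not followers:
            break  # dead end: this word was never followed by anything
        if rng is None:
            out.append(followers.most_common(1)[0][0])
        else:
            words, weights = zip(*followers.items())
            out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)

corpus = ("the unicorns spoke perfect english . "
          "the unicorns lived in a valley . "
          "the scientists found the unicorns .")
model = train_bigram(corpus)
print(generate(model, "the", 6))
print(generate(model, "the", 6, rng=random.Random(0)))
```

Greedy decoding tends to loop on the most common phrases, while sampling in proportion to the model's probabilities gives more varied text; GPT-2's showcased samples used top-k sampling, a truncated variant of the latter.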

What began as an experiment in mailing DVDs in envelopes has evolved into tech's biggest media company. Netflix, with a staggering 160 million subscribers, gives its viewers instant access to an expansive library of high-quality video. Clearly, this premise is incredibly appealing: before Netflix, customers drove to brick-and-mortar stores to browse, rent, and return physical VHS tapes and DVDs. Mail-order movie rental (and, ultimately, online streaming) rendered the Blockbusters of yore obsolete. Many early critics of the Netflix business model believed that the ritual of browsing a physical catalogue of movies was enough to fend off Netflix's superior accessibility and convenience. It wasn't: convenience and accessibility will always win.

This principle, that superior convenience and accessibility win out, has always driven, and will continue to drive, technology-focused businesses to find increasingly cost-effective and immediate ways of delivering a product or service. If this weren't true, we would not have ultra-high-speed cellular networks, a comprehensive interstate and public transportation system, or a reliable nationwide electrical grid. So why should we expect media consumption methods to stagnate?

It is at this point that I must make some serious assumptions, but please bear with me. Given computers' recent ability to synthesize photorealistic videos, convincing fake news articles, and music in a recognizable style learned from example recordings, I believe that in the future there will exist a media system reminiscent of Netflix, where a user can define the characteristics of the media he wants to consume and it will be procedurally generated in real time. This would not only save time for the consumer, since he would not need to look for a pre-made show that fits his viewing desires, but it would also sidestep the need for an expensive production crew and creative writing team. The money saved by not producing an expensive show could be reinvested into sufficiently powerful video rendering hardware and more sophisticated video synthesis research.

In fact, I believe that the first industry to be affected by this transition to synthesized media will be the adult film industry. Consider that the plot elements of pornography are not particularly sophisticated, and the content of the videos is, to a certain extent, pretty repetitive and reproducible (ha). DeepFakes made headlines this year, and similar technology has been used in applications where a user uploads a photo of a clothed woman and the application outputs a photorealistic nude render of that person. Because pornography is among the first commercial consumer applications of GANs, I expect that the pornography industry will be the most heavily influenced, at least at first, by advances in media synthesis.

An example of DeepFake technology. Actress Amy Adams (left) is modified to have the face of actor Nicolas Cage (right)

Since the output of GANs can be steered by user-defined characteristics, results could be individually fine-tuned to maximize viewing pleasure for each user. Consider Tinder, a popular dating application where users swipe left and right, effectively sorting members of the opposite sex into attractive and unattractive. An application modeled on such dating apps, where the user selects only the most sexually attractive computer-generated outputs, could retrain its image synthesis algorithm to align perfectly with its user's sexual preferences. Seeing the output of such a program may be akin to hearing a digital "Siren Song".
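The swipe-driven feedback loop described above can be sketched under heavy assumptions. Rather than retraining the image synthesis network itself, this hypothetical version (a simpler, swapped-in technique) keeps the generator fixed and shifts the region of latent space it samples from toward the latent vectors behind outputs the user swiped right on. The `like` function stands in for a real user, and the hidden "taste" direction used to simulate one is invented for illustration; no actual generator is included.

```python
import numpy as np

def preference_loop(like, dim=8, rounds=20, batch=64, step=0.5, seed=0):
    """Hypothetical swipe-feedback loop over a generator's latent space.

    `like(z)` stands in for a swipe on the image a generator would render from
    latent z. Returns the final sampling centre and the per-round like rates.
    """
    rng = np.random.default_rng(seed)
    mean = np.zeros(dim)  # centre of the latent sampling distribution
    like_rates = []       # fraction of each batch the user swiped right on
    for _ in range(rounds):
        candidates = mean + rng.standard_normal((batch, dim))
        swipes = np.array([bool(like(z)) for z in candidates])
        like_rates.append(float(swipes.mean()))
        if swipes.any():
            # Move part of the way toward the average of the liked latents.
            mean = (1 - step) * mean + step * candidates[swipes].mean(axis=0)
    return mean, like_rates

# Simulated user: a hidden "taste" direction in latent space (purely illustrative).
taste = np.ones(8) / np.sqrt(8)
mean, rates = preference_loop(lambda z: z @ taste > 1.0)
alignment = float(mean @ taste / np.linalg.norm(mean))
print(f"like rate: first round {rates[0]:.2f}, last round {rates[-1]:.2f}")
print(f"alignment with hidden taste: {alignment:.2f}")
```

In this simulation the like rate climbs as sampling drifts toward the simulated taste. A real system would also have to cope with noisy, inconsistent swipes and would likely fine-tune the generator itself rather than only moving the sampling centre.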

One more form of media that will likely be influenced by AI early on is children's video entertainment. A quick YouTube search for "Elsa", "Spiderman", or the name of any popular children's character will reveal a bottomless rabbit hole of absurd, child-oriented content. Many of these videos share similar concepts and are crudely rendered in Blender. A program could easily generate children's content procedurally for a YouTube video business.

It is the recursively addictive nature of these applications that leads me to believe digital entertainment is painting itself into a corner, so to speak. Is it reasonable to expect that AI-generated media will be the premier and preferred medium of entertainment in the future? Its low cost, unsupervised production, and adaptability to each user's profile make it the superior choice compared with the expensive, time-consuming, and generalized media production methods of the past.
